
Lorna Mills and Sally McKay

Digital Media Tree


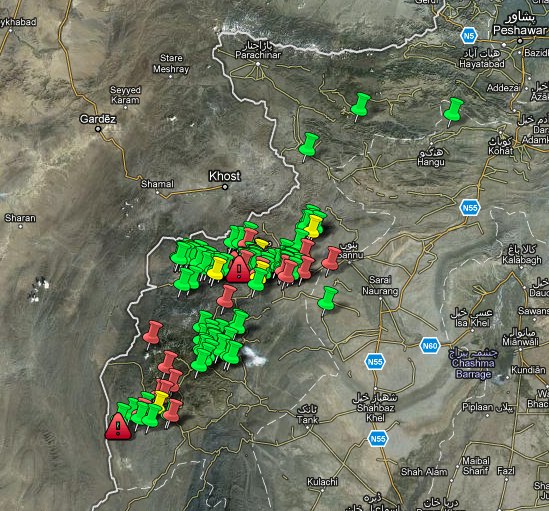

I've been playing with Cleverbot lately. It started when I watched the CBC documentary Remote Control War (available online). Various experts discussed the inevitability of military deployment of autonomous robots. Right now the US is menacing people on the ground in Pakistan with remote-control drones. It's like being buzzed by great big annoying insects that you can't reach with a flyswatter...except they are quite likely to kill you, or your neighbour, or both. The drones' controllers are in the US, sitting at consoles, drinking Starbucks, making decisions and punching the clock. It's bad. Autonomous robots might be even worse, as they'd just go on in there and make decisions for themselves about when to kill people and when to hold their fire. The army might soon be able to make a pitch that their robots' AI can, say, distinguish between combatants and civilians, but my understanding of AI research is that such a thing is just not possible. As it is, humans can't even do it. That's why they invented the concept of collateral damage. It might be possible to have robots act reliably under controlled, laboratory conditions, but the manifold variables of real-life encounters simply can't be predicted or programmed for ahead of time. (For more on this, see What Computers Can't Do by Hubert Dreyfus.) There will always be a big margin of error and downright randomness. Hilarious in the movies (remember ED-209?), but truly brown-trousers, brain-altering, high-stress awful if you're stuck on the receiving end. So I went to chit-chat with Cleverbot and see how pop-culture AI is coming along. Remember Eliza, the artificial intelligence therapist designed in the 60s? (There's an online version.) I played with Eliza a lot in the 90s, and in my opinion Cleverbot isn't doing a much better job at sounding human than she did. Cb has some potential because it's constantly collecting new data from the people who play with it.
In this way it's like 20Q, which is pretty impressive. At least the classic version, which has been played the most, is really, really good. But 20Q would be easier to design than Cleverbot because there is a set formula and rigid parameters for how the questions go (20Q means 20 questions). I'd like to know more about the programming behind the syntactical structures in Cleverbot's system. It seems to use Eliza-like strategies of throwing your words back at you in the form of a question. It also throws out random stuff that other people have typed in, often funny and interesting in a totally non-sequitur-ish, Dada kind of way. It has a very poor memory, however, and can't really follow the conversation beyond a few lines. I'm not sure why it's so shallow. But it is remarkably good at grammar! Sometimes words are misspelled and the content makes no sense, but it almost always responds with statements that are structurally valid. All in all, though, it does a poor job of following a train of thought or applying logic in any meaningful way. Cleverbot is fun, and its flaws are revealing about how language works, but I don't find it convincing, and it actually makes me think that info-tainment AI hasn't progressed much since the Eliza days. I'm sure DND has all kinds of way more sophisticated work in progress, but if the military tries to tell me that they've got an autonomous robotic system that can reliably adhere to the Geneva Conventions, I will not believe them. (Not that anybody calling the shots gives a shit about the Geneva Conventions anymore.)
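Eliza's trick of throwing your words back at you as a question can be sketched in a few lines of Python. This is a toy under stated assumptions: the pronoun swaps and the three rules below are invented for illustration, and it is neither Weizenbaum's original script nor anything from Cleverbot, which also recycles what other people have typed in. Note that `respond` keeps no record of earlier lines, which is one simple way a chatbot ends up with no memory of the conversation.

```python
import re

# Hypothetical pronoun swaps (illustrative only) used to "reflect" the
# user's own words back at them.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "myself": "yourself", "you": "me", "your": "my",
    "yours": "mine", "mine": "yours",
}

# Hypothetical keyword rules, tried in order: a pattern that must match the
# whole utterance, and a template that wraps the reflected fragment in a question.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"(.*)"), "Why do you say {0}?"),  # catch-all fallback
]


def reflect(fragment: str) -> str:
    """Lower-case the fragment, drop trailing punctuation, and swap pronouns."""
    words = fragment.lower().rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance: str) -> str:
    """Reply using only the current line; no conversation state is kept."""
    text = utterance.strip()
    if not text:
        return "Please go on."
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


if __name__ == "__main__":
    print(respond("I feel worried about my robots."))
```

For example, `respond("I feel worried about my robots.")` returns "Why do you feel worried about your robots?". The sentence comes out grammatical because the template supplies the structure, even though nothing has been understood.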

Sunday - Bhangra Remixes

Panjabi MC feat. Jay-Z - Beware

Sean Paul - Get Busy

In da Club - 50 Cent Vs Punjabi MC

Party Like a Rockstar

Just in case anyone reading yesterday's post is thinking that science+philosophy=biological determinism, here are a couple of links where science and philosophy come together to challenge eugenics-type thinking in really exciting ways. Mathematician John Conway talking about his collaborative investigations with Simon Kochen into the nature of free will. (If humans have free will, which is their a priori assumption, then so do elementary particles...kooky and convincing!) Philosopher Alva Noë interviewed by Ginger Campbell on the Brain Science Podcast, making his claim that the brain is necessary but not sufficient for consciousness. ("...your brain is a part of you. Your brain is a part of the mechanism that enables you to be you, but the brain is not all that plays a role. Your body also plays a role, and the environment plays a role. And your environment plays a role thought of in different ways. The physical environment plays a role, but also the cultural environment plays a role.")

Left to right: Christopher Hitchens, Daniel Dennett, Richard Dawkins, Sam Harris (thanks to Doug Jarvis for the link)

Call me a masochist, but I couldn't resist watching this discussion from back in 2007. It starts out funny as they fall all over themselves in a great big orgy of self-congratulatory rhetoric. As it goes on it gets more interesting and ends up downright scary. As my friend B. Smiley pointed out, these guys' main enemies are people who approach religion as if it were science, drawing empirical, causal connections between doctrine and lived experience. The ironic corollary is that these guys treat science as if it were a religious practice demanding converts, faith and followers. The outcome is a really unpleasant ideology. From about 44:58 of part 2, all the way to the end, Harris starts pushing an anti-Islamist agenda. He asks for ideas on how to engineer significant change, practical steps beyond just criticism. Hitchens (who is looking a little tipsy by the end) replies that the forces of theocracy are going to destroy civilization. He continues, "I think it's us, plus the 82nd Airborne and the 101st, who are the real fighters for secularism at the moment. [...] It is only because of the willingness of the United States to combat theocracy that we have any fighting chance at all." Ooops...and, we're out of time. Yikes! My dismay at this conversation follows on the heels of my listening to a Sam Harris TED lecture from February 2010. During the Q&A, Harris says "I don't think we need an NSF [National Science Foundation] grant to be able to understand that compulsory veiling is a bad idea. But at a certain point we're going to be able to scan the brains of everyone involved and actually interrogate them. Do people love their daughters just as much in these [Muslim] systems? I think there are clearly right answers to that." Double yikes. Eugenics, anyone?
Not only would this be an ethically disastrous use of MRI technology, it also demonstrates Harris's poor understanding of the science behind brain scans. MRI scanners make good research and diagnostic tools (they allow neuroscientists to correlate mental and emotional states with localised brain activity), but they are not mind-reading machines. At least, not if you're doing careful science. Unfortunately, Harris is promoting the kind of slipshod science that can quite easily happen when the powerful members of a society make the ideological decision that some humans are empirically better than others (what Stephen Jay Gould would call "The Mismeasure of Man"), and it stinks.

Only because of the moose.

IT'S FAMILY DAY!!!!!!

Cockweasle

Sunday - Omar Souleyman

music for Syrian TV Bellydance show

Leh Jani

Stephanie Davidson - Relaxational

Andrew J. Paterson is a personal friend, a friend of this blog, and one of the most interesting digital media/video/performance artists around. He's performing live tonight at the opening of his exhibition The Ghosts of Home Entertainment at Trinity Square Video. There is also an artist's talk on the 23rd. The show runs until March 19 (details here). Andrew's work is intellectually demanding. No wait, it's not. It's perceptually stimulating. Oh, no, it's kind of boring, people droning on and on. No, that's not right, because the scripts are brilliant, funny, caustic, and specifically situated in the context of all-too-familiar art world conversations. With insights. And it's eye candy. And also often sort of psychedelic. Kitsch! No, those ironic media tropes and quotes are actually expressing 100% heart-on-the-sleeve, honest-to-goodness love of the medium. Tropes and quotes? Yeah, but those images and that footage are at least 99.9% original. And besides, did I mention how physiologically transfixing it can be? Uh... yup. And also there are historical references, and implications of socio-political networks in which the artist inhabits alternative structures of economy and trade. And loyalty and complicity, cattiness, gossip, sex, loss and love. And did I mention the pretty pictures? Art history: digital media aesthetics didn't just drop out of the sky, you know. Oh, I forgot. Really good dance music. Original music. After all, the guy is an experienced musician and a performer.

Graphics Interchange Format At Denison University’s Mulberry Gallery with Duncan Alexander, Kevin Bewersdorf, Saul Chernick, Petra Cortright, Stephanie Davidson, dump.fm, MTAA (Tim Whidden and Michael Sarff), Lorna Mills, Tom Moody, Marcin Ramocki, and Spirit Surfers.

Curated by Paddy Johnson

Lorna Mills - Focal

Still blocked.

John Barry: 1933-2011.

The John Barry Seven: Walk Don't Run.

Shirley Bassey: Diamonds are Forever.

Nancy Sinatra: You Only Live Twice.

The Ipcress File.

"The Mozart of the '60s", John Barry brought Dick Dale's surf-guitar sound to the cinema.

He won Academy Awards for the music to Born Free, The Lion in Winter, Out of Africa and Dances with Wolves.

From Sally's link in the comments:

Egypt: Tariq Ramadan & Slavoj Zizek

The Muslim scholar and philosopher discuss the power of popular dissent and the limits of peaceful protest.

Egypt - Thursday evening 9:05 (EET) to woot or not?

The animated pages of Brenna E Murphy

LOOK! AT! MY! DOG!

update from Rob Cruickshank:

email from Julie Voyce:

Kate Wilson - The Afterlife of Buildings at General Hardware Contemporary, 1520 Queen Street West Toronto. Until March 5, 2011.

Untitled studies for a large-scale wall drawing 2010 - 2011 ink and copic pen on paper

VSVSVS

"...and then one day we decided to tickle some animals." - neuroscientist, Jaak Panksepp

Courtesy of Rob Cruickshank:

More info.